DeepSeek Introduces DeepSeek-R1-Lite-Preview with Complete Reasoning Outputs Matching OpenAI o1

Artificial intelligence (AI) models have made substantial progress over the last few years, but they continue to face critical challenges, particularly in reasoning tasks. Large language models are proficient at generating coherent text, but when it comes to complex reasoning or problem-solving, they often fall short. This inadequacy is particularly evident in areas requiring structured, step-by-step logic, such as mathematical reasoning or code-breaking. Despite their impressive generative capabilities, models tend to lack transparency in their thought processes, which limits their reliability. Users are often left guessing how a conclusion was reached, leading to a trust gap between AI outputs and user expectations. To address these issues, there is a growing need for models that can provide comprehensive reasoning, clearly showing the steps that led to their conclusions.

DeepSeek-R1-Lite-Preview: A New Approach to Transparent Reasoning

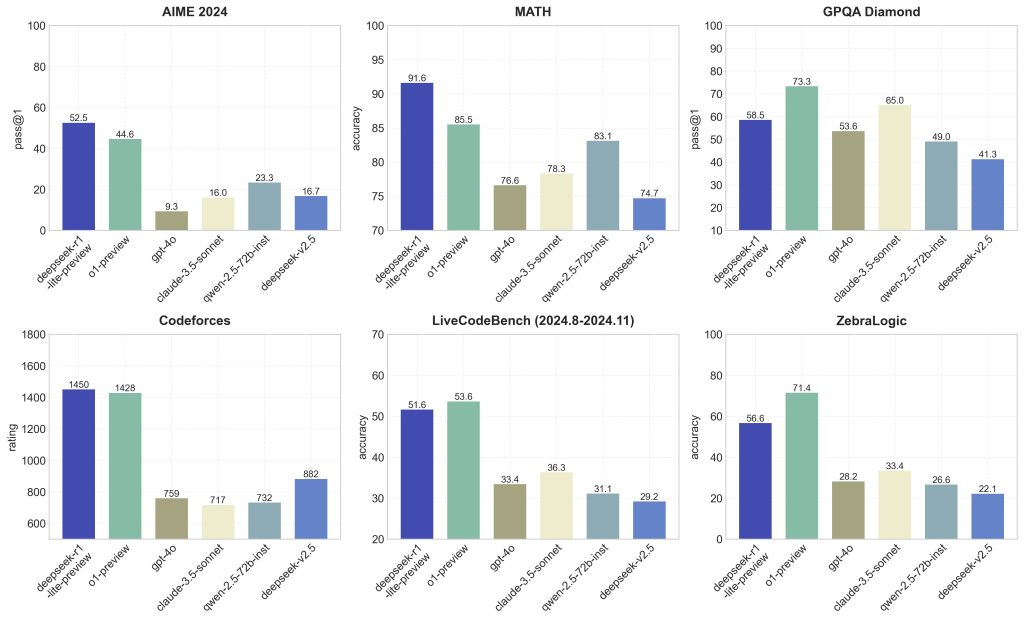

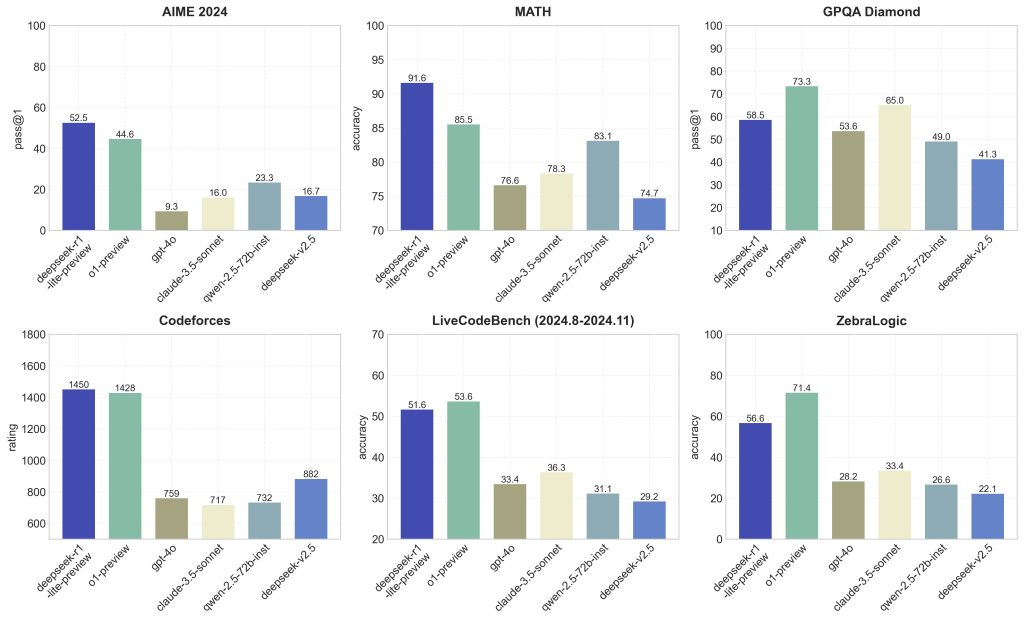

DeepSeek has made progress in addressing these reasoning gaps by launching DeepSeek-R1-Lite-Preview, a model that not only improves performance but also makes its reasoning visible to the user. According to DeepSeek, the model performs at the level of OpenAI’s o1-preview, and it is now available for testing through DeepSeek’s chat interface, which is optimized for extended reasoning tasks. This release aims to tackle deficiencies in AI-driven problem-solving by offering complete reasoning outputs. DeepSeek-R1-Lite-Preview demonstrates its capabilities on benchmarks like AIME and MATH, positioning itself as a viable alternative to some of the most advanced models in the industry.

Technical Details

DeepSeek-R1-Lite-Preview improves reasoning by incorporating Chain-of-Thought (CoT) capabilities. This feature allows the model to present its thought process in real time, enabling users to follow the logical steps taken to reach a solution. Such transparency is crucial for users who require detailed insight into how an AI model arrives at its conclusions, whether they are students, professionals, or researchers. The model’s ability to tackle intricate prompts and display its thinking process helps clarify AI-driven results and makes them easier to verify. With o1-preview-level performance on industry benchmarks like AIME (American Invitational Mathematics Examination) and MATH, DeepSeek-R1-Lite-Preview stands as a strong contender in the field of advanced AI models. Additionally, DeepSeek plans to release the model as open source and to make an API available, opening these capabilities to the broader community for experimentation and integration.

Significance and Results

DeepSeek-R1-Lite-Preview’s transparent reasoning outputs represent a significant advancement for AI applications in education, problem-solving, and research. One of the critical shortcomings of many advanced language models is their opacity; they arrive at conclusions without revealing their underlying processes. By providing a transparent, step-by-step chain of thought, DeepSeek lets users see not only the final answer but also the reasoning that led to it. This is particularly beneficial for applications in educational technology, where understanding the “why” is often just as important as the “what.” In benchmark testing, the model displayed performance levels comparable to OpenAI’s o1-preview, specifically on challenging tasks like those found in AIME and MATH. One test prompt involved deciphering the correct sequence of numbers based on clues, a task requiring multiple layers of reasoning to exclude incorrect options and arrive at the solution. DeepSeek-R1-Lite-Preview provided the correct answer (3841) while maintaining a transparent output that explained each step of the reasoning process.
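To make the "exclude incorrect options" style of reasoning concrete, here is a minimal Python sketch of solving a four-digit clue puzzle by brute-force elimination. The article does not reproduce the clues from the test prompt, so the clues below are an assumption: an illustrative set chosen so that 3841 is the only code consistent with all of them. The function names `score` and `solve` are likewise hypothetical, and this is not a description of how the model itself reasons.

```python
from itertools import product

# Hypothetical clues (not taken from the article):
# (guess, digits in the right place, digits present but in the wrong place)
CLUES = [
    ("9285", 0, 1),
    ("1937", 0, 2),
    ("5201", 1, 0),
    ("6507", 0, 0),
    ("8524", 0, 2),
]

def score(guess: str, code: str) -> tuple[int, int]:
    """Return (well placed, misplaced) counts for a guess against a code."""
    placed = sum(g == c for g, c in zip(guess, code))
    misplaced = sum(g != c and g in code for g, c in zip(guess, code))
    return placed, misplaced

def solve(clues):
    """Keep every 4-digit code whose score matches all clues."""
    candidates = ("".join(digits) for digits in product("0123456789", repeat=4))
    return [
        code
        for code in candidates
        if all(score(guess, code) == (p, m) for guess, p, m in clues)
    ]

print(solve(CLUES))  # prints ['3841']
```

Exhaustive search checks all 10,000 candidates against every clue, whereas a step-by-step chain of thought would typically narrow the options one clue at a time (for example, the fourth clue rules out the digits 6, 5, 0, and 7 entirely). Both routes arrive at the same single surviving code for this clue set.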

Conclusion

DeepSeek’s introduction of DeepSeek-R1-Lite-Preview marks a noteworthy advancement in AI reasoning capabilities, addressing some of the critical shortcomings seen in current models. By matching OpenAI’s o1-preview on benchmarks such as AIME and MATH and making its reasoning visible, DeepSeek has pushed the field forward in a meaningful way. The real-time thought process and the planned open-source model and API release indicate DeepSeek’s commitment to making advanced AI technologies more accessible. As the field continues to evolve, models like DeepSeek-R1-Lite-Preview could bring clarity, accuracy, and accessibility to complex reasoning tasks across various domains. Users now have the opportunity to try a reasoning model that not only provides answers but also reveals the reasoning behind them, making AI both more understandable and more trustworthy.

The post DeepSeek Introduces DeepSeek-R1-Lite-Preview with Complete Reasoning Outputs Matching OpenAI o1 appeared first on MarkTechPost.
